Building Your Own MCP Server with LlamaIndex

A Comprehensive Guide to Tool Interfaces for AI Integration

Note: MCP here refers to the Model Context Protocol, an open standard introduced by Anthropic in November 2024. The server examples in this guide are simplified for educational purposes and do not implement the full specification.

They use verified tools where available, and any hypothetical elements are clearly labeled.

1. Introduction: What Is MCP and Why It Matters

The Model Context Protocol (MCP) is a universal interface, often described as a “USB-C port for AI”, that lets large language models (LLMs) interact with diverse external tools and data sources (e.g., databases, APIs, file systems) without a custom integration for each one. It standardizes how AI applications (like Claude) connect to external services. This simplifies development and lets security controls such as authentication be applied consistently.

2. Understanding the Fundamentals

Key Components of MCP

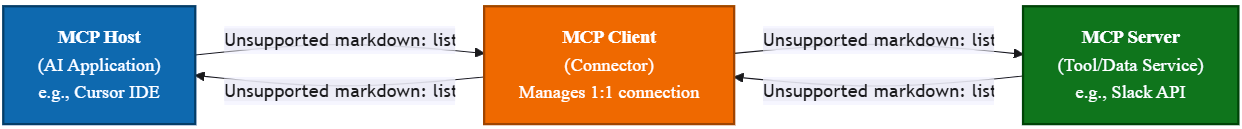

MCP follows a client–server model with these primary roles:

MCP Host:

The AI application (e.g., an LLM-powered chatbot or code editor) that requires external data or capabilities.MCP Client:

A logical connector within the host that manages a one-to-one relationship with an MCP server. It handles message exchanges and negotiates capabilities.MCP Server:

A lightweight service that exposes specific tools or data through standardized protocols. It enforces authentication (via methods like OAuth2/OIDC) and permission scopes.

Architecture Diagram

Below is a colorful Mermaid diagram that illustrates the interaction between the components:
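The one-to-one client–server relationship and capability negotiation described above can be sketched as a toy, in-process model. The method names `initialize`, `tools/list`, `tools/call`, and `resources/read` mirror those in the MCP specification. The message shapes are heavily simplified, and the classes `ToyServer` and `ToyClient` are hypothetical names that do not come from any MCP SDK. In the sketch, the client records what the server declares during the handshake and refuses to call features the server did not advertise.

```python
from typing import Any, Callable, Dict, Optional


class ToyServer:
    """Stand-in MCP server: exposes tools and declares its capabilities."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., Any]]):
        self.name = name
        self.tools = tools

    def handle(self, method: str, params: Dict[str, Any]) -> Any:
        if method == "initialize":
            # Advertise only the feature families this server actually implements.
            return {"serverInfo": {"name": self.name}, "capabilities": {"tools": {}}}
        if method == "tools/list":
            return {"tools": sorted(self.tools)}
        if method == "tools/call":
            return self.tools[params["name"]](**params.get("arguments", {}))
        raise ValueError(f"unknown method: {method}")


class ToyClient:
    """Lives inside the host and holds a one-to-one connection to a single server."""

    def __init__(self, server: ToyServer):
        self.server = server
        self.capabilities: Dict[str, Any] = {}

    def initialize(self) -> None:
        # Handshake: record what the server says it supports.
        self.capabilities = self.server.handle("initialize", {})["capabilities"]

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Any:
        # "tools/list" needs the "tools" capability, "resources/read" needs "resources", etc.
        feature = method.split("/")[0]
        if feature not in self.capabilities:
            raise PermissionError(f"server did not declare the '{feature}' capability")
        return self.server.handle(method, params or {})


# The host keeps one client per server it connects to.
host_clients = {"math": ToyClient(ToyServer("math", {"add": lambda a, b: a + b}))}
for client in host_clients.values():
    client.initialize()
```

Calling `host_clients["math"].request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})` returns 5. A `resources/read` request raises `PermissionError` because this server never declared resources.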

3. Getting Started with MCP Development

Why a Server?

An MCP server acts as a dedicated helper for your AI application. It runs the backend logic to access external resources and perform actions—serving as the bridge between your LLM and external data.

Setting Up a Basic Python Server

For beginners, using Python with frameworks like FastAPI or Flask is an ideal starting point.

FastAPI Example with API Key Authentication

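```python
import os
import secrets

from fastapi import FastAPI, HTTPException, Security
from fastapi.security import APIKeyHeader

app = FastAPI()

API_KEY_NAME = "X-API-KEY"
# Expected key comes from the environment (MCP_API_KEY is a hypothetical variable name).
EXPECTED_API_KEY = os.getenv("MCP_API_KEY", "")

# auto_error=True makes FastAPI reject requests that omit the header.
api_key_scheme = APIKeyHeader(name=API_KEY_NAME, auto_error=True)


@app.get("/health")
async def health_check(api_key: str = Security(api_key_scheme)):
    # compare_digest runs in constant time, so timing does not reveal how much of the key matched.
    if not EXPECTED_API_KEY or not secrets.compare_digest(api_key, EXPECTED_API_KEY):
        raise HTTPException(status_code=401, detail="Invalid API key")
    return {"status": "healthy"}

# Run with: uvicorn main:app --port 8000 --reload
```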

Flask Example

```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route('/api/data', methods=["GET"])
def get_data():
    return jsonify({'message': 'Data retrieved successfully'})


if __name__ == '__main__':
    # debug=True enables the reloader and interactive debugger; use it only for local development.
    app.run(debug=True)
```

Tip: Use API development tools (e.g., Postman or Insomnia) to test your endpoints.

4. Core Concepts of MCP Server Development

Protocol Details and Message Structure

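An MCP server must follow the protocol's message format, which is based on JSON-RPC 2.0. For readability, the example below calls an illustrative document.search method directly. Under the MCP specification, a server-defined tool like this would instead be invoked through the standard tools/call method, with the tool name and its arguments in params. A request for a document search might look like this: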

```json
{
  "jsonrpc": "2.0",
  "method": "document.search",
  "params": {
    "query": "LLM integration",
    "filters": {"type": "pdf"}
  },
  "id": 1
}
```

And a corresponding response:

```json
{
  "jsonrpc": "2.0",
  "result": {
    "documents": [
      {"id": "doc1", "title": "LLM Basics", "snippet": "Introduction to LLMs..."}
    ]
  },
  "id": 1
}
```

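Error Handling Example

When a request's parameters are missing or malformed, the server returns an error object instead of a result. The code -32602 is the standard JSON-RPC code for invalid params:

```json
{
  "jsonrpc": "2.0",
  "error": {
    "code": -32602,
    "message": "Invalid params"
  },
  "id": 1
}
```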
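A server-side dispatcher that produces the request, response, and error shapes shown above could look like the following stdlib-only sketch. The names `DOCUMENTS`, `document_search`, and `handle_request` are hypothetical. The handler does naive word matching against an in-memory list as a stand-in for a real index. The error codes are the standard JSON-RPC 2.0 ones: -32700 (parse error), -32600 (invalid request), -32601 (method not found), and -32602 (invalid params).

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical in-memory corpus standing in for a real document index.
DOCUMENTS: List[Dict[str, str]] = [
    {"id": "doc1", "title": "LLM Basics", "type": "pdf", "snippet": "Introduction to LLMs..."},
    {"id": "doc2", "title": "Serving APIs", "type": "html", "snippet": "Deploying models over HTTP..."},
]


class InvalidParams(Exception):
    """Raised by a handler when the request's params are malformed."""


def document_search(params: Dict[str, Any]) -> Dict[str, Any]:
    query, filters = params.get("query"), params.get("filters", {})
    if not isinstance(query, str) or not query.strip() or not isinstance(filters, dict):
        raise InvalidParams()
    words = query.lower().split()
    hits = []
    for doc in DOCUMENTS:
        text = f"{doc['title']} {doc['snippet']}".lower()
        # Keep documents that satisfy every filter and mention at least one query word.
        if all(doc.get(k) == v for k, v in filters.items()) and any(w in text for w in words):
            hits.append({"id": doc["id"], "title": doc["title"], "snippet": doc["snippet"]})
    return {"documents": hits}


METHODS: Dict[str, Callable[[Dict[str, Any]], Any]] = {"document.search": document_search}


def error_response(code: int, message: str, req_id: Any) -> str:
    return json.dumps({"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": req_id})


def handle_request(raw: str) -> str:
    """Turn one raw JSON-RPC request string into a response string."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return error_response(-32700, "Parse error", None)
    if not isinstance(request, dict) or request.get("jsonrpc") != "2.0":
        return error_response(-32600, "Invalid Request", None)
    req_id = request.get("id")
    handler = METHODS.get(request.get("method"))
    if handler is None:
        return error_response(-32601, "Method not found", req_id)
    params = request.get("params", {})
    try:
        if not isinstance(params, dict):
            raise InvalidParams()
        result = handler(params)
    except InvalidParams:
        return error_response(-32602, "Invalid params", req_id)
    return json.dumps({"jsonrpc": "2.0", "result": result, "id": req_id})
```

Passing the example request above to `handle_request` yields the example response. Sending `"query": 42` yields the -32602 error.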

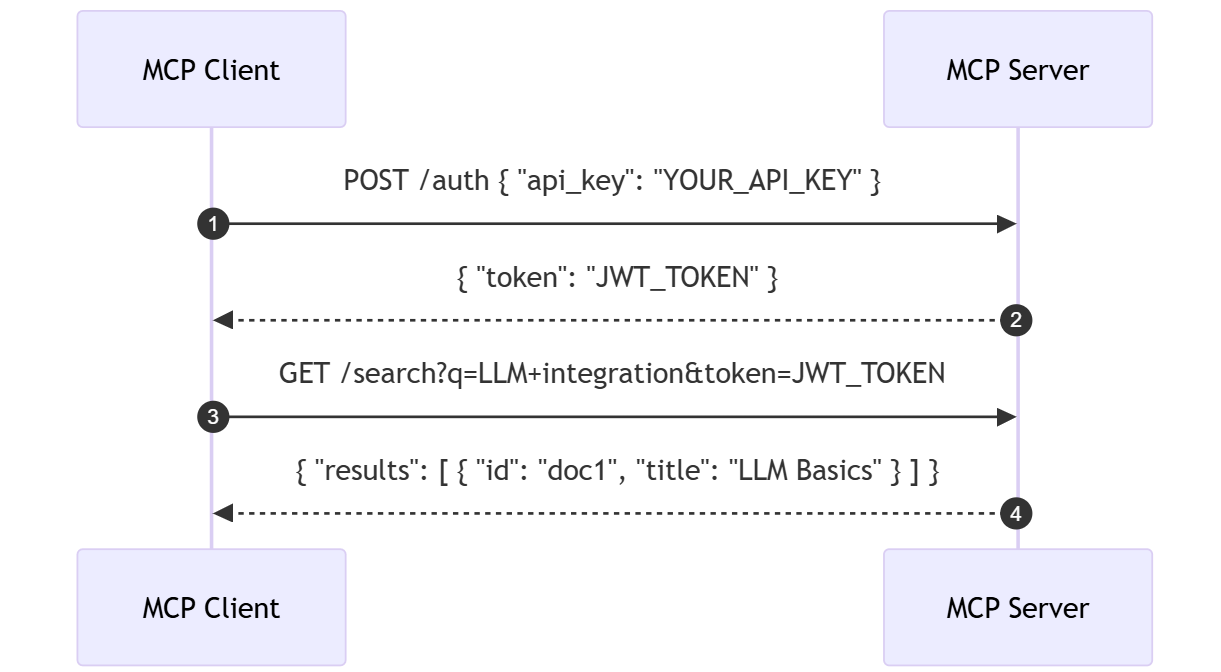

Sequence Diagram for Authentication Flow

Here’s a colorful Mermaid sequence diagram that visualizes an authentication flow:

5. Integrating AI with LlamaIndex

LlamaIndex is a powerful framework for enabling Retrieval-Augmented Generation (RAG) in your MCP server. It helps ingest documents, create vector indexes, and process queries.

Basic Integration: Ingestion and Indexing

```python
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex
from llama_index.embeddings.huggingface import HuggingFaceEmbedding

# Load documents (e.g., PDFs) from the "data" directory
documents = SimpleDirectoryReader("data", required_exts=[".pdf"]).load_data()

# Select an embedding model (requires the llama-index-embeddings-huggingface package)
embed_model = HuggingFaceEmbedding(model_name="sentence-transformers/all-mpnet-base-v2")

# Create and persist the vector index
index = VectorStoreIndex.from_documents(documents, embed_model=embed_model, show_progress=True)
index.storage_context.persist(persist_dir="./storage")
```
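Before embedding, `VectorStoreIndex.from_documents` splits each document into smaller chunks, which LlamaIndex calls nodes. The sketch below illustrates the idea with a simple word-based sliding window with overlap. It is not LlamaIndex's own splitter: `chunk_words` is a hypothetical helper, and the default `chunk_size` and `overlap` values are arbitrary examples.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from either neighboring chunk. This comes at the cost of storing some words twice.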

Querying with LlamaIndex

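```python
# as_query_engine() uses the globally configured LLM (Settings.llm), which defaults
# to OpenAI and needs OPENAI_API_KEY unless you configure a different LLM.
query_engine = index.as_query_engine()
response = query_engine.query("What are the key features of this document?")
print(response)
```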
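Conceptually, the query engine embeds the question, retrieves the stored chunks most similar to it, and hands them to the LLM as context for the answer. The retrieval step can be sketched with cosine similarity, a common similarity measure, over toy bag-of-words vectors. The `embed` function here is a deliberately crude, hypothetical stand-in for a real embedding model such as the Hugging Face model above, and the default `k` is arbitrary.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: a sparse bag-of-words count vector (stand-in for a real model)."""
    return dict(Counter(text.lower().split()))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

A real vector store does the same ranking with dense vectors and usually an approximate nearest-neighbor index instead of scoring every chunk.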

Advanced users should explore additional index types (e.g., tree indices, keyword table indices) and various data connectors.

6. Building Advanced MCP Server Features

Integrating External Tool Interfaces

Note: For web search integration, use verified libraries or LlamaIndex's officially supported integrations, such as the readers in the llama-index-readers-web package.

Example: Integrating a Web Search Reader

```python
import os

from fastapi import APIRouter

# WebSearchReader is a hypothetical class used for illustration; replace it with a
# search integration that is actually installed in your environment.
from llama_index.readers.web import WebSearchReader

# Initialize the web search reader with your API key
web_search = WebSearchReader(api_key=os.getenv("WEB_SEARCH_KEY"))

web_search_router = APIRouter()


# A plain `def` lets FastAPI run this (presumably blocking) search call in a
# threadpool instead of stalling the event loop.
@web_search_router.get("/search")
def search(q: str):
    results = web_search.search(query=q)
    return {"results": results}


# `app` is the FastAPI instance from the earlier example.
app.include_router(web_search_router)
```

Hybrid Retrieval and Observability

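Combine multiple retrieval strategies by routing each query to the index best suited to it, here either a vector index or a keyword table index:

```python
from llama_index.core import KeywordTableIndex, VectorStoreIndex
from llama_index.core.query_engine import RouterQueryEngine
from llama_index.core.selectors import LLMSingleSelector
from llama_index.core.tools import QueryEngineTool

# Reuses `documents` and `embed_model` from the ingestion example.
vector_index = VectorStoreIndex.from_documents(documents, embed_model=embed_model)
keyword_index = KeywordTableIndex.from_documents(documents)

# The LLM selector picks one engine per query based on each tool's description.
query_engine = RouterQueryEngine(
    selector=LLMSingleSelector.from_defaults(),
    query_engine_tools=[
        QueryEngineTool.from_defaults(vector_index.as_query_engine(), description="Semantic search over the documents."),
        QueryEngineTool.from_defaults(keyword_index.as_query_engine(), description="Exact keyword lookup in the documents."),
    ],
)
```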
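The router above sends each query to a single engine. A common alternative for hybrid retrieval is to query both indexes and merge their ranked results. One way to merge them is reciprocal rank fusion (RRF): each document scores the sum of 1/(k + rank) over every result list it appears in. The sketch below is a standalone illustration of RRF on document IDs, not a LlamaIndex API. k = 60 is the constant commonly used for RRF, and the two input rankings are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several best-first ranked lists of document IDs into one list."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from the vector and keyword retrievers.
vector_hits = ["doc3", "doc1", "doc7"]
keyword_hits = ["doc1", "doc9", "doc3"]
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

Here `fused` is `["doc1", "doc3", "doc9", "doc7"]`. Documents found by both retrievers rise to the top, and doc1 edges out doc3 because its combined ranks (2 and 1) are better than doc3's (1 and 3).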

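Enhance observability with Prometheus (metrics) and OpenTelemetry (tracing). For example, OpenTelemetry spans can wrap calls to the query engine:

```python
from opentelemetry import trace

tracer = trace.get_tracer("mcp_server")

def answer(question: str) -> str:
    # Each call becomes a span; without a configured SDK and exporter this is a no-op.
    with tracer.start_as_current_span("query_engine.query"):
        return str(query_engine.query(question))
```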
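For request metrics such as call counts, error counts, and latency (the kind of data a Prometheus counter or histogram records), a dependency-free sketch is a decorator that times each call. `METRICS`, `instrumented`, and `search_documents` are hypothetical names. In production you would export these values through a client library such as prometheus_client or OpenTelemetry metrics rather than keep them in a dictionary.

```python
import functools
import time
from collections import defaultdict
from typing import Any, Callable, Dict

# Hypothetical in-process metric store: name -> {"calls", "errors", "total_seconds"}.
METRICS: Dict[str, Dict[str, float]] = defaultdict(
    lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0}
)


def instrumented(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Record call count, error count, and cumulative latency for the wrapped function."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                METRICS[name]["errors"] += 1
                raise
            finally:
                METRICS[name]["calls"] += 1
                METRICS[name]["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator


@instrumented("document_search")
def search_documents(query: str) -> list:
    """Hypothetical handler used to demonstrate the decorator."""
    if not query:
        raise ValueError("empty query")
    return [query.upper()]
```

Error rate and mean latency then follow as `errors / calls` and `total_seconds / calls`. This wrapper is synchronous; an `async def` handler would need an `async` variant of `wrapper`.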

Deepening Security Coverage

Example: mTLS Setup with FastAPI (Conceptual)

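```shell
# Server TLS plus client-certificate verification (mTLS); ca.pem is the CA that signs client certificates.
# --ssl-cert-reqs=2 corresponds to ssl.CERT_REQUIRED, so clients without a valid certificate are rejected.
uvicorn main:app --host 0.0.0.0 --port 8000 --ssl-keyfile=./key.pem --ssl-certfile=./cert.pem --ssl-ca-certs=./ca.pem --ssl-cert-reqs=2
```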

For Vault integration, consult your secret management system’s documentation to load and inject secrets at runtime.

7. Connecting and Utilizing MCP Clients

The Cursor IDE Example

Cursor (an AI-powered code editor) supports two MCP transports: stdio, where Cursor launches the server as a local command, and SSE (Server-Sent Events), where it connects to a running server over HTTP. An illustrative SSE configuration follows.
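With the stdio transport, the client starts the server as a subprocess, and the two exchange JSON-RPC messages over stdin and stdout, one JSON message per line. A minimal sketch of the server side of that loop follows. It assumes newline-delimited JSON, and `serve_stdio` and the `HANDLERS` table are hypothetical names. The function takes arbitrary text streams, so it can be exercised with in-memory buffers instead of a real subprocess.

```python
import io
import json
import sys
from typing import Any, Callable, Dict, TextIO

Handler = Callable[[Dict[str, Any]], Any]


def serve_stdio(handlers: Dict[str, Handler], stdin: TextIO = sys.stdin, stdout: TextIO = sys.stdout) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        if not line.strip():
            continue
        message = json.loads(line)
        handler = handlers.get(message.get("method"))
        if "id" not in message:
            # Notifications carry no id and must never receive a response.
            if handler is not None:
                handler(message.get("params", {}))
            continue
        if handler is None:
            reply = {"jsonrpc": "2.0", "id": message["id"],
                     "error": {"code": -32601, "message": "Method not found"}}
        else:
            reply = {"jsonrpc": "2.0", "id": message["id"],
                     "result": handler(message.get("params", {}))}
        # json.dumps escapes embedded newlines, so each message stays on a single line.
        stdout.write(json.dumps(reply) + "\n")
        stdout.flush()


# Hypothetical handler table.
HANDLERS: Dict[str, Handler] = {"ping": lambda params: {}}

# Drive the loop with in-memory streams instead of a real subprocess.
demo_in = io.StringIO(
    '{"jsonrpc": "2.0", "method": "ping", "id": 1}\n'
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
    '{"jsonrpc": "2.0", "method": "unknown", "id": 2}\n'
)
demo_out = io.StringIO()
serve_stdio(HANDLERS, demo_in, demo_out)
```

In a real server you would call `serve_stdio(HANDLERS)` from the entry point. Because stdout carries protocol messages, any logging from a stdio server should go to stderr.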

SSE Configuration (cursor-mcp.yaml)

```yaml
servers:
  - name: "Corporate Knowledge Base"
    type: sse
    url: "https://mcp.example.com/sse"
    auth:
      type: oauth2
      client_id: "cursor-prod"
      scopes: ["files:read", "search:execute"]
```
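On the wire, an SSE stream is plain text: each event is a few `field: value` lines followed by a blank line. The sketch below shows server-side encoding and client-side decoding of one event carrying a JSON-RPC message. It assumes the message travels in the `data:` field of an event named `message`; that event name, like the helper names `format_sse_event` and `parse_sse_event`, is an illustrative choice.

```python
import json
from typing import Any, Dict, Optional, Tuple


def format_sse_event(data: Dict[str, Any], event: Optional[str] = None) -> str:
    """Encode a JSON payload as one Server-Sent Event."""
    lines = [f"event: {event}"] if event else []
    # Every payload line needs its own "data:" prefix (compact JSON is a single line).
    lines += [f"data: {part}" for part in json.dumps(data).split("\n")]
    return "\n".join(lines) + "\n\n"  # the blank line terminates the event


def parse_sse_event(block: str) -> Tuple[Optional[str], Any]:
    """Decode one event block produced by format_sse_event."""
    event, data_lines = None, []
    for line in block.splitlines():
        field, _, value = line.partition(":")
        value = value[1:] if value.startswith(" ") else value  # drop one leading space
        if field == "event":
            event = value
        elif field == "data":
            data_lines.append(value)
    return event, json.loads("\n".join(data_lines))
```

A streaming server would yield one `format_sse_event(...)` string per message on a response with the `text/event-stream` content type.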

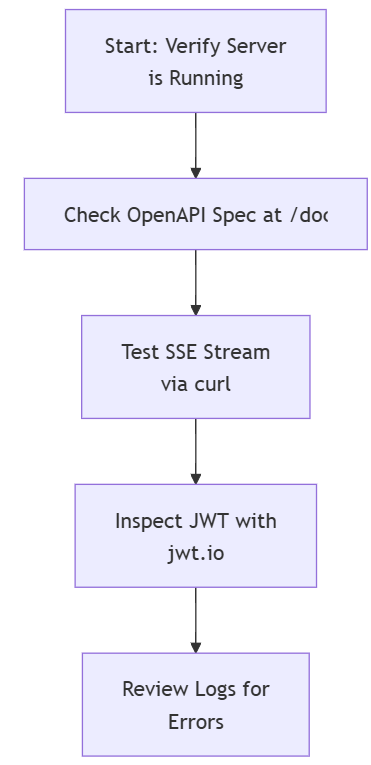

Troubleshooting Flow Diagram

8. Best Practices for Production-Ready MCP Servers

Security

Authentication & Authorization: Use API keys or OAuth2 for authentication, and enforce strict permission scopes.

Data Encryption: Secure all communications with HTTPS/TLS; consider encrypting sensitive data at rest.

Input Sanitization & Rate Limiting: Validate all incoming requests, and add rate-limiting middleware to prevent abuse.

Auditing: Log all interactions for security monitoring and debugging.
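Two of these practices, permission scopes and rate limiting, can be sketched without any framework. The scope names reuse `files:read` and `search:execute` from the Cursor configuration. The mapping from methods to required scopes (including the `file.read` method) is hypothetical, and the token bucket's capacity and refill rate are arbitrary. In a FastAPI server, checks like these would typically run in a dependency or middleware.

```python
import time
from typing import Callable, Dict, Set

# Hypothetical mapping from server operations to the scope each one requires.
REQUIRED_SCOPES: Dict[str, str] = {
    "document.search": "search:execute",
    "file.read": "files:read",
}


def is_authorized(method: str, granted_scopes: Set[str]) -> bool:
    """Deny by default: unknown methods and missing scopes are both rejected."""
    required = REQUIRED_SCOPES.get(method)
    return required is not None and required in granted_scopes


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last check, without exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

This bucket lives in process memory, so each replica of a horizontally scaled, stateless deployment would enforce its own limit. A shared store would be needed for a global limit.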

Scalability & Performance

Stateless Design: Build your server to be stateless to support horizontal scaling.

Load Balancing & Caching: Use load balancers and caching mechanisms.

Asynchronous Programming: Utilize Python’s asyncio (or equivalent) to handle concurrent requests.
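With asyncio, independent I/O-bound calls, such as several tool or retrieval requests, can run concurrently. The sketch below uses `asyncio.gather` with an `asyncio.Semaphore` to cap how many run at once. `fetch_tool_result` is a hypothetical coroutine that simulates I/O with `asyncio.sleep`, and the limit of 3 is arbitrary.

```python
import asyncio
from typing import List, Tuple


async def fetch_tool_result(name: str, delay: float) -> str:
    """Hypothetical I/O-bound call; asyncio.sleep stands in for a network or database wait."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def run_bounded(names: List[str], limit: int = 3, delay: float = 0.05) -> Tuple[List[str], int]:
    """Run all calls concurrently, but never more than `limit` at the same time."""
    semaphore = asyncio.Semaphore(limit)
    in_flight = peak = 0

    async def guarded(name: str) -> str:
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fetch_tool_result(name, delay)
            finally:
                in_flight -= 1

    # gather returns results in input order, regardless of completion order.
    results = await asyncio.gather(*(guarded(name) for name in names))
    return list(results), peak


results, peak = asyncio.run(run_bounded([f"tool{i}" for i in range(7)]))
```

Seven calls with a limit of 3 finish in about three sleep intervals rather than seven, and `peak` confirms that no more than three were in flight.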

Monitoring & Deployment

Observability: Collect key metrics (latency, error rates) and use structured logging.

Alerting: Set up alerts for critical events.

Deployment: Consider Docker containers, Kubernetes, or cloud platforms for robust deployments.
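Structured logging means emitting each log record as machine-parseable fields, commonly one JSON object per line, so that log pipelines can filter on fields such as a request ID. Below is a minimal sketch with the standard `logging` module. The `JsonFormatter` class, the `mcp_server` logger name, and the `request_id` field are illustrative choices.

```python
import io
import json
import logging
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Fields passed via `extra=` become attributes on the record.
            "request_id": getattr(record, "request_id", None),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(stream: TextIO) -> logging.Logger:
    logger = logging.getLogger("mcp_server")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace any previously attached handlers
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


# Example: write to an in-memory buffer so the output can be inspected.
buffer = io.StringIO()
log = build_logger(buffer)
log.info("search completed", extra={"request_id": "req-42"})
```

In production the stream would be stderr or a file, and you would typically add a timestamp field. It is omitted here to keep the output deterministic.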

9. Contributing to the MCP Ecosystem

For experienced developers looking to contribute:

Specification Contributions: Engage in discussions, propose refinements, and help shape the evolving MCP specification.

SDK & Client Libraries: Develop tools that streamline MCP server/client creation.

Community Sharing: Publish your MCP servers and integrations in open repositories or marketplaces.

Documentation & Tutorials: Create resources to help others understand and implement MCP.

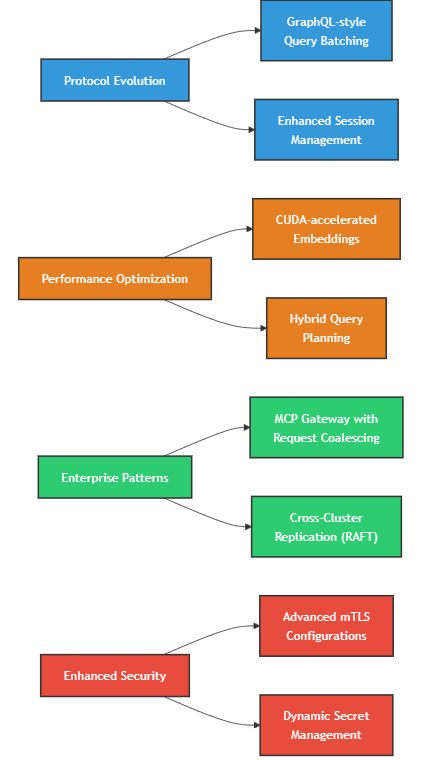

10. Future Directions & Advanced Considerations

The MCP and LlamaIndex ecosystems are likely to evolve. Future areas include:

11. Conclusion

Building your own MCP server with LlamaIndex takes you from setting up basic API endpoints to deploying a production-grade AI integration service. This guide has provided a roadmap covering:

Foundational Setup: Establishing a Python backend with FastAPI/Flask.

Protocol and Message Handling: Adhering to the JSON-RPC 2.0 message format.

LlamaIndex Integration: Ingesting, indexing, and querying documents for retrieval-augmented generation.

Advanced Features: Integrating external tool interfaces, hybrid retrieval, observability, and robust security.

Client Connectivity: A configuration example for connecting MCP servers to clients like Cursor.

Production Best Practices: Security, scalability, monitoring, and deployment strategies.

Community & Future Trends: How to contribute and what to expect in the evolving MCP landscape.

By following this comprehensive guide, you can develop a secure, scalable, and interoperable MCP server that leverages the robust capabilities of LlamaIndex while adhering to industry best practices. Whether you’re a beginner or an expert, this roadmap empowers you to build, deploy, and contribute to the future of AI integration.

Sources & Further Reading:

For more details, refer to the official Model Context Protocol specification and documentation published by Anthropic, and to the LlamaIndex documentation.