LangChain: Overview & Use Cases

Episode: Week 2

Abinash:

Hey everyone, welcome back to our podcast on building next-gen AI solutions.

I'm your host, Abinash Mishra, and today we're discussing LangChain, a framework that's quickly becoming essential for developers and leaders working with large language models.

Have you struggled with the complexity of language model calls or found that chatbots lose track of the conversation history?

Well, today's guest, Rahul Singh, has navigated these waters extensively.

Rahul, thanks so much for joining!

Rahul:

Thanks for having me, Abinash!

Excited to be here, and yep—I've absolutely experienced those frustrations firsthand. Working without LangChain was like herding cats—doable, but messy.

Abinash:

Alright. Let's jump right into it, then.

🔸 Segment 1: Why LangChain? Solving Real-World Pain Points 🔸

Abinash:

Rahul, before we dive deep, let me ask you: what problem does LangChain solve?

Also, why did LangChain become so popular so quickly?

Rahul:

Great question.

LangChain takes away the complexity of integrating LLMs into applications.

Without it, you end up repeating the same tedious tasks: crafting prompt engineering logic, handling API errors, and manually tracking conversational memory... It's exhausting.

I like to compare LangChain to LEGO blocks for AI—pre-built components that snap together.

Or maybe even a conductor orchestrating the complex instruments of LLM integrations.

Abinash:

Wow, Rahul, that's a nice analogy! So in simple terms, it streamlines workflows and saves a lot of time.

Rahul:

Exactly.

For developers, that means faster iterations.

For leadership, it translates to significantly reduced technical debt and better strategic outcomes.

🔸 Segment 2: LangChain Under-the-Hood (Core Components Explained) 🔸

Abinash:

Now, let's unpack LangChain a bit. Rahul, can you quickly walk us through the main building blocks?

Rahul:

Sure.

LangChain’s main components are models, chains, memory, agents & tools, and vector stores integrated via RAG.

Let's quickly break each one down:

Models: LangChain integrates with models from popular providers like OpenAI, Anthropic, and Hugging Face. Think of it as having a universal remote for all your LLMs.

Chains: Think of chains as your AI assembly line. They organize your workflow—prompt creation, querying models, post-processing responses—in a tidy sequential manner.

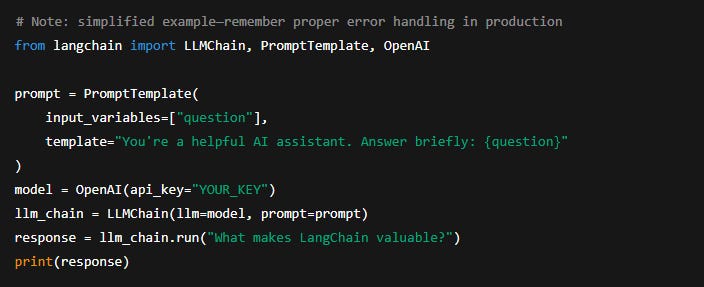

For instance, a basic chain setup in Python looks like this:
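The snippet shown in the episode is not preserved in this transcript. As a stand-in, here is a minimal, library-free sketch of the idea Rahul describes: a chain as three steps run in order (prompt creation, model call, post-processing). The names `build_prompt`, `fake_llm`, `clean_output`, and `run_chain` are hypothetical, and `fake_llm` only imitates a model call. None of this is LangChain's actual API.

```python
# Library-free sketch of a "chain": prompt creation -> model call -> post-processing.
# All names here are hypothetical; fake_llm stands in for a real LLM call.

def build_prompt(topic: str) -> str:
    """Step 1: fill in a prompt template."""
    return f"Write one sentence about {topic}."

def fake_llm(prompt: str) -> str:
    """Step 2: stand-in for a model call; echoes the prompt with stray padding."""
    return f"  [model reply to: {prompt}]  "

def clean_output(text: str) -> str:
    """Step 3: post-process the raw response."""
    return text.strip()

def run_chain(topic: str) -> str:
    """Run the three steps in order, feeding each result into the next."""
    return clean_output(fake_llm(build_prompt(topic)))

print(run_chain("vector stores"))
```

Swapping `fake_llm` for a real model client would leave the other two steps untouched, which is the modularity the LEGO analogy points at.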

And nowadays, most devs are moving toward LCEL (LangChain Expression Language) to simplify complex workflows, especially with async operations.
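In LCEL, steps are composed with the `|` operator, for example `prompt | model | parser`. The sketch below imitates that composition style in plain Python so the mechanics are visible. The `Step` class is a hypothetical illustration, not LangChain's implementation, and the "model" is a stand-in lambda.

```python
# Plain-Python imitation of pipe-style composition (hypothetical Step class,
# not LangChain code). Each Step wraps a function; `a | b` builds a new Step
# that runs a, then feeds its result to b.

class Step:
    def __init__(self, func):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right: self first, then other.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value):
        return self.func(value)

prompt = Step(lambda topic: f"Summarize {topic} in one line.")
model = Step(lambda text: f"ECHO: {text}")  # stand-in for a real model call
parser = Step(str.lower)                    # trivial post-processing step

chain = prompt | model | parser
print(chain.invoke("LCEL"))
```

The appeal of this style is that the pipeline reads in the same order the data flows.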

Memory: Without memory, chatbots can feel robotic—forgetting previous dialogue. LangChain solves this by integrating modules like BufferMemory and ChatMessageHistory, preserving context across interactions.
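To make the memory idea concrete, here is a minimal sketch of buffer-style memory: every turn is stored, and the whole history is sent to the model on each call. `BufferChat` and `fake_llm` are hypothetical names for illustration and do not reproduce the API of LangChain's memory modules.

```python
# Sketch of buffer-style conversation memory (hypothetical names, no LangChain).
# The full history is replayed to the model on every turn.

class BufferChat:
    def __init__(self, llm):
        self.llm = llm
        self.history = []  # list of (role, text) pairs, oldest first

    def ask(self, user_message: str) -> str:
        self.history.append(("user", user_message))
        transcript = "\n".join(f"{role}: {text}" for role, text in self.history)
        reply = self.llm(transcript)
        self.history.append(("assistant", reply))
        return reply

def fake_llm(transcript: str) -> str:
    """Stand-in model: reports how many user turns it was shown."""
    turns = sum(1 for line in transcript.splitlines() if line.startswith("user: "))
    return f"I can see {turns} user message(s)."

chat = BufferChat(fake_llm)
print(chat.ask("Hi, my name is Sam."))
print(chat.ask("What is my name?"))
```

Because the buffer grows with every turn, a real application would usually trim or summarize old turns to stay within the model's context window.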

Agents & Tools: These are like AI project managers—they dynamically select specialized tools or APIs to use in real-time, making intelligent decisions instead of static responses.
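A rough sketch of the agent pattern: a registry of tools plus a decision step that picks one per request. In a real agent the language model makes that decision. Here `choose_tool` is a hypothetical keyword rule standing in for it, and the two tools are toy examples.

```python
# Sketch of tool selection (hypothetical names; a keyword rule stands in
# for the LLM's decision about which tool to call).

def add_numbers(text: str) -> str:
    """Tool 1: add up every whole number mentioned in the request."""
    return str(sum(int(tok) for tok in text.split() if tok.isdigit()))

def count_words(text: str) -> str:
    """Tool 2: count the words in the request."""
    return str(len(text.split()))

TOOLS = {"add": add_numbers, "count_words": count_words}

def choose_tool(request: str) -> str:
    """Stand-in for the model's choice: use 'add' if any number appears."""
    has_number = any(tok.isdigit() for tok in request.split())
    return "add" if has_number else "count_words"

def run_agent(request: str) -> str:
    name = choose_tool(request)
    return f"{name} -> {TOOLS[name](request)}"

print(run_agent("add 2 and 40"))
print(run_agent("how many words here"))
```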

Vector Stores & RAG: Lastly, vector stores power something called Retrieval-Augmented Generation (RAG). Imagine an AI-powered internal Google search that instantly pulls relevant documents to enhance responses.
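The retrieval step behind RAG can be sketched in a few lines: embed the documents, embed the question, rank by similarity, and paste the best match into the prompt. This toy version uses word counts as "embeddings" and an in-memory list as the "vector store". Real systems use learned embeddings and a dedicated store. The sample documents and all function names are made up for illustration.

```python
# Toy retrieval-augmented prompt builder (illustrative only, stdlib only).
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Made-up sample documents standing in for a knowledge base.
DOCS = [
    "refunds are processed within five business days",
    "password resets are sent by email",
    "accounts can be closed from the settings page",
]
INDEX = [(doc, embed(doc)) for doc in DOCS]  # the in-memory "vector store"

def retrieve(question: str, k: int = 1) -> list:
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str) -> str:
    """Put the retrieved context in front of the question."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("how are refunds processed"))
```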

Abinash:

Very well explained, Rahul. There's one more component worth mentioning, which is LangSmith. Would you like to shed a little light on it?

Rahul:

Yes!

LangSmith is LangChain’s observability and debugging platform.

It helps monitor, test, and optimize your chains and agents, essential when moving from prototype to production.
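To give a feel for what step-level tracing records, here is a small stdlib-only sketch: a decorator that logs each step's name, input, output, and duration. This is not LangSmith's API. `traced` and `TRACE` are hypothetical names, and a hosted platform adds storage, dashboards, and evaluation on top of this basic idea.

```python
# Stdlib-only tracing sketch (hypothetical names; not LangSmith).
import time
from functools import wraps

TRACE = []  # one record per traced call, in execution order

def traced(step_name: str):
    """Decorator that records input, output, and duration of a step."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = func(*args, **kwargs)
            TRACE.append({
                "step": step_name,
                "input": args,
                "output": result,
                "seconds": time.perf_counter() - start,
            })
            return result
        return wrapper
    return decorator

@traced("prompt")
def build_prompt(question: str) -> str:
    return f"Q: {question}"

@traced("model")
def fake_llm(prompt: str) -> str:
    return f"A: {prompt}"  # stand-in for a real model call

answer = fake_llm(build_prompt("What is RAG?"))
for record in TRACE:
    print(record["step"], "->", record["output"])
```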

🔸 Segment 3: Real-World Applications, Wins & War Stories 🔸

Abinash:

Alright, Rahul, theory’s great, but let’s get practical. Can you share a real-world success story where LangChain genuinely delivered results?

Rahul:

Absolutely.

Recently, a fintech startup I advised combined LangChain and Pinecone (a popular vector DB). Their goal was to tackle slow customer support response times. By leveraging RAG capabilities, the system retrieved precise, relevant documents instantly.

The result? A 40% cut in ticket resolution times. Customers are happier, operations are smoother—real bottom-line impact.

Abinash:

Impressive. But real life isn't always smooth sailing.

Any cautionary tales?

Rahul:

Oh yes!

Another team enthusiastically created LangChain agents to handle customer interactions but overloaded them without proper throttling or logic checks.

The outcome was agents frequently getting stuck or providing confusing outputs, leading to frustrated customers.
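One way to picture the missing logic checks is an agent loop with two guards: a hard cap on steps and a check for repeated actions. The sketch below is an assumption about what such guards could look like, not a description of what that team built. `run_with_guards` and the sample agents are hypothetical.

```python
# Guarded agent loop sketch (hypothetical; shows a step cap and a repeat check).

def run_with_guards(next_action, max_steps: int = 5) -> str:
    """Call next_action(step) until it returns 'finish' or a guard trips."""
    seen = set()
    for step in range(1, max_steps + 1):
        action = next_action(step)
        if action == "finish":
            return f"finished after {step} step(s)"
        if action in seen:
            # Same action twice suggests the agent is looping.
            return f"stopped: repeated action '{action}' at step {step}"
        seen.add(action)
    return f"stopped: hit the {max_steps}-step limit"

def stuck_agent(step: int) -> str:
    return "search_docs"  # keeps choosing the same action

def healthy_agent(step: int) -> str:
    return ["search_docs", "draft_reply", "finish"][step - 1]

print(run_with_guards(stuck_agent))
print(run_with_guards(healthy_agent))
```

A production setup would typically add rate limiting on outbound API calls as well, which this sketch leaves out.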

Abinash:

Ouch—what’s the big takeaway?

Rahul:

Balance is key.

Start simple, monitor with tools like LangSmith, and scale thoughtfully.

🔸 Segment 4: Strategic Advice, Limitations & Alternatives 🔸

Abinash:

Speaking of scaling thoughtfully, let's zoom out strategically. What should engineering leaders consider before adopting LangChain?

Rahul:

First, understand that it's great for rapid prototyping and flexible integrations, but scaling up might trigger API cost spikes or unexpected complexity.

Keep an eye on your budget.
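A back-of-the-envelope estimate makes the cost risk easier to see. The function below multiplies request volume by tokens per request and a per-token price. Every number in the example is a hypothetical placeholder, not a real provider price, and `estimate_monthly_cost` is an illustrative name.

```python
# Rough monthly API cost estimate. All figures are hypothetical placeholders.

def estimate_monthly_cost(requests_per_day: int,
                          tokens_per_request: int,
                          price_per_1k_tokens: float,
                          days: int = 30) -> float:
    """Total tokens for the period, priced per 1,000 tokens."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1000 * price_per_1k_tokens

# Hypothetical prototype traffic versus ten times that traffic.
prototype = estimate_monthly_cost(1_000, 2_000, 0.01)
scaled = estimate_monthly_cost(10_000, 2_000, 0.01)
print(f"prototype: {prototype:.2f}, scaled: {scaled:.2f}")
```

Cost grows linearly with traffic and with prompt size, so long retrieved contexts or multi-step agents can multiply the bill even when request volume stays flat.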

Second, security and privacy are critical.

LangChain offers basic tools to handle sensitive data, like Personally Identifiable Information (PII) masking.

But ensure you have comprehensive security protocols in place, too.
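As a simple illustration of PII masking, the sketch below replaces email addresses and phone-like numbers with placeholders before text is sent to a model. It is a plain regex example, not LangChain's tooling, and the patterns are deliberately rough: they will miss some formats and can over-match others, so treat this as a starting point only.

```python
# Minimal regex-based PII masking sketch (illustrative; patterns are approximate).
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def mask_pii(text: str) -> str:
    """Replace emails first, then phone-like digit runs."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

print(mask_pii("Reach me at jane.doe@example.com or 555-123-4567."))
```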

And lastly, be aware that LangChain isn’t the only game in town.

Alternatives like LlamaIndex, Semantic Kernel, or custom-built solutions might better suit specific use cases.

Evaluate your needs carefully—don’t just jump onto the LangChain train because it’s popular.

🔸 Segment 5: Limitations & Cautions 🔸

Abinash:

We touched briefly on limitations earlier. Anything else our listeners should know before diving into LangChain?

Rahul:

Definitely.

LangChain, despite simplifying many tasks, has a steep learning curve initially.

It requires a foundational understanding of LLM workflows and prompt engineering.

Also, the complexity of chains and agents, if not managed well, can lead to performance overhead—monitoring with LangSmith helps manage this.

Lastly, dependency on external LLM APIs introduces vendor lock-in risks.

Always have backup plans or alternative providers ready.
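A backup plan can be as simple as trying providers in order and moving on when one fails. The sketch below shows that pattern with stand-in functions. `call_with_fallback`, `primary`, and `backup` are hypothetical names, and real provider clients would replace the two stubs.

```python
# Provider fallback sketch (hypothetical names; stubs stand in for real clients).

def call_with_fallback(prompt: str, providers):
    """Try each (name, callable) pair in order; return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

def primary(prompt: str) -> str:
    raise TimeoutError("primary provider timed out")  # simulated outage

def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"

used, answer = call_with_fallback("Hello", [("primary", primary), ("backup", backup)])
print(used, "->", answer)
```

Prompts often need adjusting per model, so it is worth testing the fallback path before it is needed in production.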

🔸 Segment 6: Wrap-Up, Recap, and Call-to-Action 🔸

Abinash:

Rahul, this has been incredibly insightful.

Let’s quickly summarize the key takeaways:

LangChain simplifies building LLM apps by modularizing workflows.

Core features: Models, Chains, Memory, Agents & Tools, Vector Stores (RAG), and LangSmith observability.

Practical applications, like the RAG-based support system that cut ticket resolution times by 40%.

Be cautious of complexity, scaling costs, and security/privacy considerations.

Alternatives exist—choose carefully based on your context.

Rahul, what would you recommend listeners do next?

Rahul:

Don’t wait—experiment!

This week, build a simple LangChain-based bot.

Share your experiences—good or bad—with us online; use the hashtag #LangChainStruggles.

Let’s learn together.

Abinash:

Love that challenge. And listeners, don't miss the next episode, where we’re hacking LangChain to create a meme-generating AI.

So prepare your weirdest prompts!

Rahul, thanks again for your wisdom and time.

Rahul:

My pleasure, Abinash! Thanks for having me.

Abinash:

To our audience—thanks for tuning in.

I'm Abinash Mishra, reminding you to stay curious, experiment fearlessly, and build something remarkable.

Catch you next time!